Lambda表达式原理

简单例子:

@FunctionalInterface

interface Print<T> {

public void print(T x);

}

public class Lambda {

public static void PrintString(String s, Print<String> print) {

print.print(s);

}

public static void main(String[] args) {

PrintString("test", (x) -> System.out.println(x));

}

}

LambdaMetafactory会将Lambda表达式动态编译成内部类,类似动态代理,生成实现接口的类

public static CallSite metafactory(MethodHandles.Lookup caller,

String invokedName,

MethodType invokedType,

MethodType samMethodType,

MethodHandle implMethod,

MethodType instantiatedMethodType)

throws LambdaConversionException {

AbstractValidatingLambdaMetafactory mf;

mf = new InnerClassLambdaMetafactory(caller, invokedType,

invokedName, samMethodType,

implMethod, instantiatedMethodType,

false, EMPTY_CLASS_ARRAY, EMPTY_MT_ARRAY);

mf.validateMetafactoryArgs();

return mf.buildCallSite();

}

内部类反编译类似如下:

@FunctionalInterface

interface Print<T> {

public void print(T x);

}

public class Lambda {

public static void PrintString(String s, Print<String> print) {

print.print(s);

}

private static void lambda$0(String x) {

System.out.println(x);

}

final class $Lambda$1 implements Print{

@Override

public void print(Object x) {

lambda$0((String)x);

}

}

public static void main(String[] args) {

PrintString("test", new Lambda().new $Lambda$1());

}

}

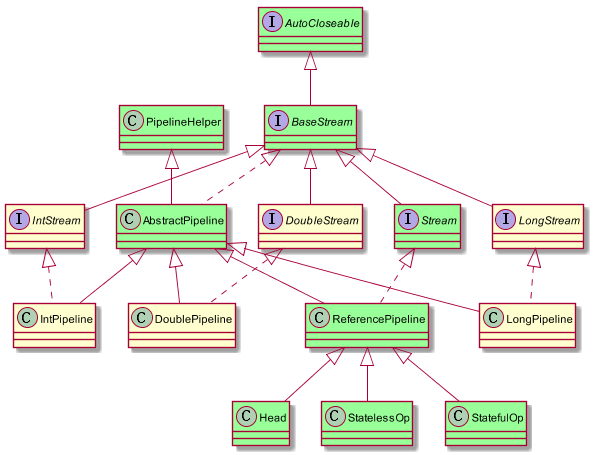

stream原理

List<Integer> list = new ArrayList<>(Arrays.asList(1,2,3,4));

List<Integer> res = list.stream().filter(x -> x % 2 == 0).collect(Collectors.toList());

StreamSupport方法,将Collection转换为steam(ReferencePipeline实现类)

public static <T> Stream<T> stream(Spliterator<T> spliterator, boolean parallel) {

Objects.requireNonNull(spliterator);

return new ReferencePipeline.Head<>(spliterator,

StreamOpFlag.fromCharacteristics(spliterator),

parallel);

}

ReferencePipeline实现filter方法,将中间的调用链转换为StatelessOp(ReferencePipeline的子类)

@Override

public final Stream<P_OUT> filter(Predicate<? super P_OUT> predicate) {

Objects.requireNonNull(predicate);

return new StatelessOp<P_OUT, P_OUT>(this, StreamShape.REFERENCE,

StreamOpFlag.NOT_SIZED) {

@Override

Sink<P_OUT> opWrapSink(int flags, Sink<P_OUT> sink) {

return new Sink.ChainedReference<P_OUT, P_OUT>(sink) {

@Override

public void begin(long size) {

downstream.begin(-1);

}

@Override

public void accept(P_OUT u) {

if (predicate.test(u))

downstream.accept(u);

}

};

}

};

}

构造方法最后会使用AbstractPipeline的方法,指定nextStage、previousStage、sourceStage等等

AbstractPipeline(AbstractPipeline<?, E_IN, ?> previousStage, int opFlags) {

if (previousStage.linkedOrConsumed)

throw new IllegalStateException(MSG_STREAM_LINKED);

previousStage.linkedOrConsumed = true;

previousStage.nextStage = this;

this.previousStage = previousStage;

this.sourceOrOpFlags = opFlags & StreamOpFlag.OP_MASK;

this.combinedFlags = StreamOpFlag.combineOpFlags(opFlags, previousStage.combinedFlags);

this.sourceStage = previousStage.sourceStage;

if (opIsStateful())

sourceStage.sourceAnyStateful = true;

this.depth = previousStage.depth + 1;

}

ReferencePipeline.collect方法

@Override

@SuppressWarnings("unchecked")

public final <R, A> R collect(Collector<? super P_OUT, A, R> collector) {

A container;

if (isParallel()

&& (collector.characteristics().contains(Collector.Characteristics.CONCURRENT))

&& (!isOrdered() || collector.characteristics().contains(Collector.Characteristics.UNORDERED))) {

container = collector.supplier().get();

BiConsumer<A, ? super P_OUT> accumulator = collector.accumulator();

forEach(u -> accumulator.accept(container, u));

}

else {

//主要入口

container = evaluate(ReduceOps.makeRef(collector));

}

return collector.characteristics().contains(Collector.Characteristics.IDENTITY_FINISH)

? (R) container

: collector.finisher().apply(container);

}

AbstractPipeline.evaluate方法如下

final <R> R evaluate(TerminalOp<E_OUT, R> terminalOp) {

assert getOutputShape() == terminalOp.inputShape();

if (linkedOrConsumed)

throw new IllegalStateException(MSG_STREAM_LINKED);

linkedOrConsumed = true;

//并行用ReduceOp.evaluateParallel,否则ReduceOp.evaluateSequential

return isParallel()

? terminalOp.evaluateParallel(this, sourceSpliterator(terminalOp.getOpFlags()))

: terminalOp.evaluateSequential(this, sourceSpliterator(terminalOp.getOpFlags()));

}

ReduceOp.evaluateParallel调用helper的wrapAndCopyInto

@Override

public <P_IN> R evaluateSequential(PipelineHelper<T> helper,

Spliterator<P_IN> spliterator) {

return helper.wrapAndCopyInto(makeSink(), spliterator).get();

}

@Override

final <P_IN, S extends Sink<E_OUT>> S wrapAndCopyInto(S sink, Spliterator<P_IN> spliterator) {

copyInto(wrapSink(Objects.requireNonNull(sink)), spliterator);

return sink;

}

//先转换成sink链

@Override

@SuppressWarnings("unchecked")

final <P_IN> Sink<P_IN> wrapSink(Sink<E_OUT> sink) {

Objects.requireNonNull(sink);

for ( @SuppressWarnings("rawtypes") AbstractPipeline p=AbstractPipeline.this; p.depth > 0; p=p.previousStage) {

sink = p.opWrapSink(p.previousStage.combinedFlags, sink);

}

return (Sink<P_IN>) sink;

}

//真正执行循环:spliterator.forEachRemaining

@Override

final <P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator) {

Objects.requireNonNull(wrappedSink);

if (!StreamOpFlag.SHORT_CIRCUIT.isKnown(getStreamAndOpFlags())) {

wrappedSink.begin(spliterator.getExactSizeIfKnown());

spliterator.forEachRemaining(wrappedSink);

wrappedSink.end();

}

else {

copyIntoWithCancel(wrappedSink, spliterator);

}

}